This work enables Augmented Reality applications to operate in unknown environments. A SLAM system maps the environment while simultaneously localising the camera, and also assists the user in placing authored annotations. A key application domain for this technology is the provision of remote expertise. The system supports this use by sending the video and annotations over a network link, providing an enriched communication channel between the user and the remote expert.

[2007 ISMAR Paper]

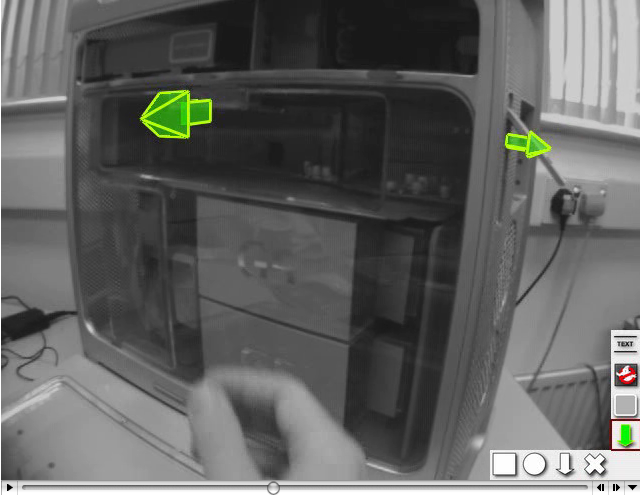

Fig.1: Left to right: selecting a disk bay on a computer; placing a note that this disk should be replaced; the system estimates the 3D location of the note automatically and renders it correctly registered to the environment.

Fig.2: Information flow between the main system components. SLAM estimates basic camera pose and 3D point map. The augmentation component renders annotation overlays. User interaction defines new landmarks, while SLAM updates annotations.

Augmented reality applications depend heavily on geometric knowledge about the world to fuse computer graphics with real-world environments. Recent work in real-time visual simultaneous localisation and mapping (SLAM) allows a system equipped with a single camera to create a model of the user's environment without any prior knowledge. One scenario where this is of interest is remote collaboration in unknown environments, where a remote expert annotates a local live view to support a mobile user. This is the target application of this project.

Fig. 1 shows an example of the system in operation. A remote expert wants to show a service technician which hard disk to replace. She is connected via a shared video and voice link and can also place 3D annotations into the scene. She selects the rectangular outline of the drive bay and, with a single click, places an annotation on its front plane. The system estimates the 3D location of the graphics automatically and keeps the annotation aligned, even when it is viewed from a different angle.
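To illustrate why an annotation anchored at a 3D location stays registered as the viewpoint changes, here is a minimal sketch that reprojects a fixed world point into the image for two camera poses. It assumes a simple pinhole camera without lens distortion; the function name `project`, the world-to-camera convention (`R`, `t`), and the intrinsic values are illustrative assumptions, not taken from the system described here.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def project(point_w: Vec3, R: List[List[float]], t: Vec3,
            fx: float, fy: float, cx: float, cy: float
            ) -> Optional[Tuple[float, float]]:
    """Project a 3D world point into pixel coordinates.

    Assumes a pinhole model where the camera pose maps world to camera
    coordinates as X_c = R * X_w + t (a hypothetical convention).
    Returns None if the point lies behind the camera.
    """
    xc = [sum(R[i][j] * point_w[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0.0:
        return None
    return (fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy)


# Hypothetical annotation anchor 2 m in front of the first camera.
anchor: Vec3 = (0.0, 0.0, 2.0)
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Same 3D anchor, two camera poses: the pixel position moves with the
# camera, so the overlay stays attached to the same place in the scene.
pixel_a = project(anchor, identity, (0.0, 0.0, 0.0), 500.0, 500.0, 320.0, 240.0)
pixel_b = project(anchor, identity, (0.2, 0.0, 0.0), 500.0, 500.0, 320.0, 240.0)
```

In a live system the pose for each frame would come from the SLAM estimate, and the overlay would be redrawn at the reprojected position.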

The system uses a monocular SLAM system developed by Ethan Eade for general pose and landmark estimation. It is extended with an interface that lets the user track and capture simple structures such as rectangles or circles in the view. When the user selects a structure to annotate, the user interface component sends the description of the observed structure to the SLAM system, which introduces a new landmark into the map and measures it accurately during subsequent frames (see Fig. 2). These complex landmarks capture more information at once but do not require more state to be estimated.

Complex landmarks are planar structures that can be reliably tracked in the environment. For example, the rectangular outline of a poster on a wall or of a sticker on an engine forms such a complex landmark. The location of the annotation itself is derived from the landmark structure instead of being specified by the user. All parameters are linked to the landmark in the map and are continuously updated as the estimate of the landmark converges.
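As a rough illustration of deriving an annotation location from a planar landmark, the sketch below computes an anchor point and a plane normal from the four 3D corners of a rectangle. This is only one plausible way to do it, under the assumption that the landmark is available as ordered corner points; the function `annotation_anchor` and this corner representation are hypothetical and not the parameterisation used by the system described here.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def annotation_anchor(corners: Sequence[Vec3]) -> Tuple[Vec3, Vec3]:
    """Return (centre, unit normal) for a planar rectangle.

    `corners` are the four 3D corners in order around the outline
    (an assumed representation of the landmark).
    """
    if len(corners) != 4:
        raise ValueError("expected four corners")
    centre = tuple(sum(c[i] for c in corners) / 4.0 for i in range(3))
    # Two edges sharing corner 0 span the plane of the rectangle.
    normal = _cross(_sub(corners[1], corners[0]), _sub(corners[3], corners[0]))
    length = math.sqrt(sum(x * x for x in normal))
    if length == 0.0:
        raise ValueError("degenerate rectangle")
    return centre, tuple(x / length for x in normal)


# Hypothetical landmark estimate: a unit square 2 m in front of the origin.
estimate = [(0.0, 0.0, 2.0), (1.0, 0.0, 2.0), (1.0, 1.0, 2.0), (0.0, 1.0, 2.0)]
centre, normal = annotation_anchor(estimate)
```

Because the anchor is a pure function of the landmark estimate, calling it again whenever the estimate is refined keeps the annotation tied to the landmark as it converges.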

Publications

- Gerhard Reitmayr, Ethan Eade and Tom Drummond

Semi-automatic Annotations in Unknown Environments

Proc. IEEE ISMAR'07, 2007, Nara, Japan. [BIBTEX]

Media

Videos showing a Power Mac repair scenario and a more complex scene where both the local user and the remote expert add annotations.
